About me

I am currently pursuing my Master’s degree in Machine Learning at Carnegie Mellon University, and I’m seeking Machine Learning Engineer and Applied Research Internship opportunities for Summer 2026.

I earned my Bachelor of Science in Computer Engineering from the University of Illinois Urbana-Champaign (Highest Honors) and a Bachelor of Engineering in Electronic and Computer Engineering from Zhejiang University (Outstanding Graduate).

During my undergraduate studies, I was advised by Professor Volodymyr Kindratenko and conducted research in Professor Tong Zhang’s lab, where my work focused on compressing LLMs and post-training the compressed models. I also worked at Tencent as an Applied Research Intern, where I architected a full-stack generative AI pipeline for 3D scene generation, powered by an LLM agent, and implemented inference acceleration techniques to reduce end-to-end latency.

Explore my projects and connect with me: https://beryex.github.io

Education

Carnegie Mellon University, Dec. 2026

Master of Science in Machine Learning, Incoming Student

University of Illinois Urbana-Champaign, Jun. 2025

Bachelor of Science in Computer Engineering, Highest Honors, GPA: 3.94/4.00

- Core Courses: Artificial Intelligence (A+), Machine Learning (A+), Computer Systems & Programming (A), Computer Systems Engineering (A), Intro to Algorithms & Models of Computation (A+), Data Structures (A+), Game Development (A+), Analog Signal Processing (A+), Digital Signal Processing (A+), Probability with Engineering Applications (A+)

- Awards: Dean’s List (Fall 2023 & Spring 2024)

Zhejiang University, Jun. 2025

Bachelor of Engineering in Electronic and Computer Engineering, GPA: 3.97/4.00

- Core Courses: Discrete Mathematics (A+), Linear Algebra (A), Calculus (A), Differential Equations (A)

- Awards: ZJU-UIUC Institute First-Class Academic Excellence Award (2023 & 2024), Zhejiang University First-Class Scholarship (2024)

Stanford University, Jun. 2024 - Aug. 2024

Non-Degree, Undergraduate Summer Visitor, GPA: 4.075/4.30

- Core Courses: Data Mining & Analysis, Convex Optimization

Research Experience

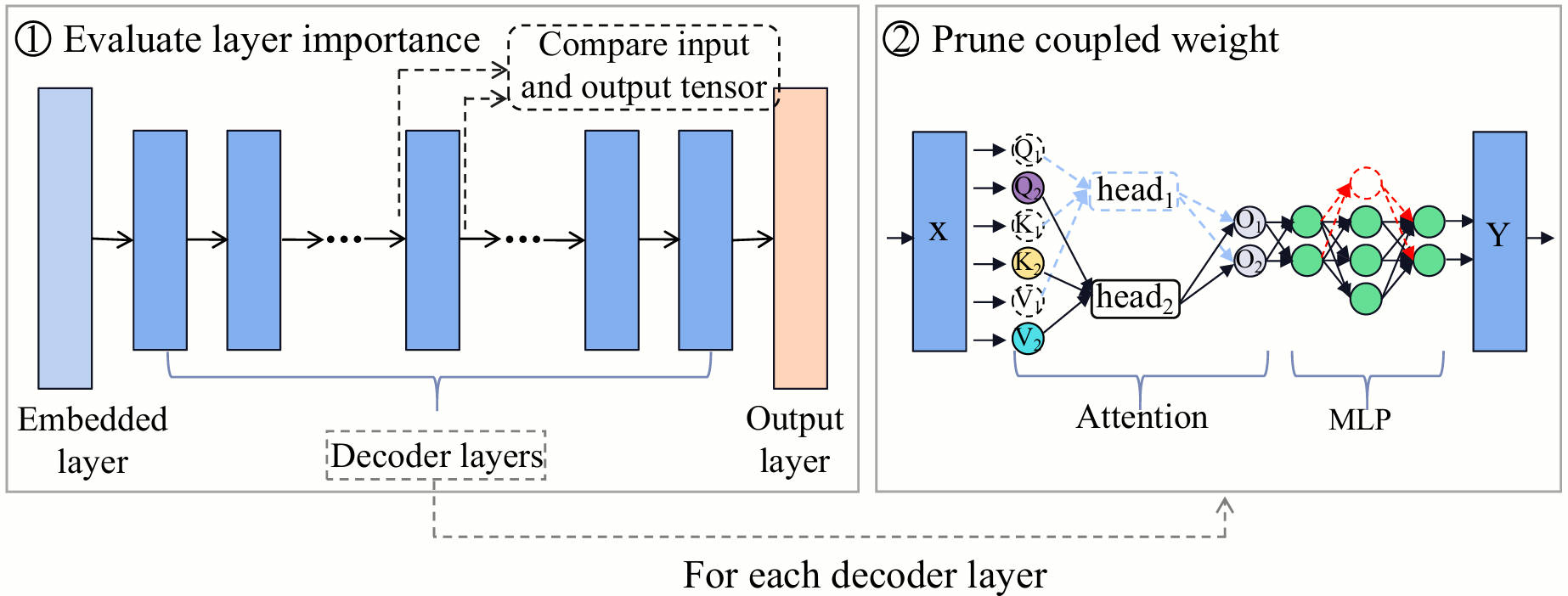

Adapt-Pruner: Adaptive and Structured Pruning for Efficient LLMs (Co-First Author), Preprint

Supervisor: Tong Zhang

- Proposed Adapt-Pruner, a structured pruning method for LLMs that adaptively estimates the importance of each decoder layer by measuring the distance between the layer’s input and output tensors, then assigns each layer a corresponding sparsity level

- Evaluated on LLaMA-3.1-8B, Qwen2.5-7B, and Gemma-2-9B, demonstrating a 5% improvement in commonsense benchmark scores over state-of-the-art methods, including SliceGPT, at 50% sparsity

- Compressed LLaMA-3.2-3B to 1.3B parameters and post-trained it on 0.05B tokens, outperforming TinyLlama-1.1 pretrained on 10B tokens and demonstrating that structured pruning can efficiently produce smaller models with far fewer computational resources
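
The layer-wise allocation idea in the Adapt-Pruner bullets can be illustrated with a small, dependency-free sketch. It is not the Adapt-Pruner implementation: it assumes cosine distance as the input/output distance and uses a hypothetical linear rule (`allocate_sparsity`, with an illustrative `spread` parameter) that gives layers which barely change their input more sparsity while keeping the average sparsity at the target.

```python
import math
from typing import List, Sequence


def cosine_distance(x: Sequence[float], y: Sequence[float]) -> float:
    """Return 1 - cosine similarity between a layer's input and output vectors.

    Assumes both vectors are non-zero. 0 means the layer left its input
    unchanged in direction; larger values mean the layer transformed it more.
    """
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return 1.0 - dot / (norm_x * norm_y)


def allocate_sparsity(importances: List[float], target: float,
                      spread: float = 0.1) -> List[float]:
    """Hypothetical rule: map per-layer importance to per-layer sparsity.

    Less important layers get more sparsity. Each layer deviates from `target`
    by at most `spread`, and the average sparsity across layers equals `target`.
    """
    lo, hi = min(importances), max(importances)
    span = hi - lo if hi > lo else 1.0
    # Normalize importance to [0, 1] and center it so the offsets sum to zero.
    normalized = [(s - lo) / span for s in importances]
    mean_norm = sum(normalized) / len(normalized)
    return [target - spread * (n - mean_norm) for n in normalized]


# Toy (input, output) hidden states standing in for three decoder layers.
layer_io = [
    ([1.0, 0.0], [1.0, 0.0]),  # unchanged: distance 0, least important
    ([1.0, 0.0], [0.0, 1.0]),  # rotated 90 degrees: distance 1, most important
    ([1.0, 0.0], [1.0, 1.0]),  # rotated 45 degrees: distance about 0.29
]
importances = [cosine_distance(x, y) for x, y in layer_io]
sparsities = allocate_sparsity(importances, target=0.5)
# The unchanged layer receives the highest sparsity, the 90-degree layer the lowest.
```

In a real model the hidden states would come from running calibration data through each decoder layer; the toy vectors above only stand in for them.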

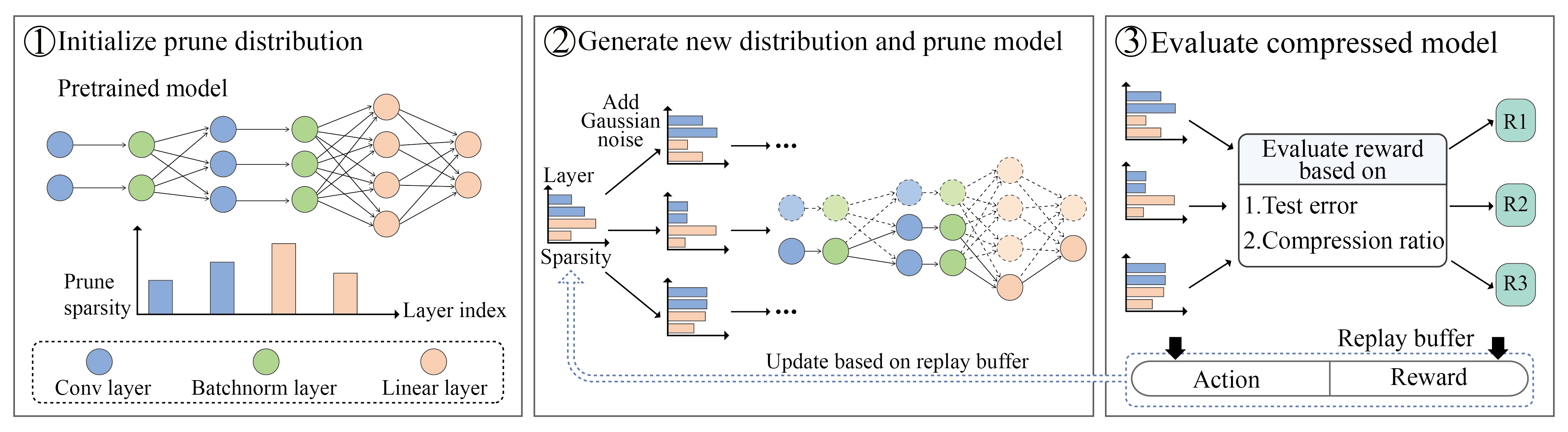

RL-Pruner: Structured Pruning Using Reinforcement Learning for CNNs (First Author), Preprint, Source Code

Supervisor: Volodymyr Kindratenko

- Developed an end-to-end approach that compresses CNNs using structured pruning and Q-learning, with the compressed model’s accuracy as the reward, to learn the optimal layer-wise pruning distribution that minimizes performance loss

- Applied response-based Knowledge Distillation to post-train the compressed models, using the original uncompressed model as the teacher to transfer learned representations and efficiently recover accuracy lost during compression

- Independently implemented the entire framework in about 2,000 lines of PyTorch code and experimented on VGG, ResNet, GoogLeNet, and MobileNet, achieving an 81% parameter reduction on VGG-19 (CIFAR-100) with less than a 1% performance drop
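
Response-based distillation, as described in the RL-Pruner bullets, is commonly implemented with the Hinton-style loss sketched below: the student matches the teacher's temperature-softened output distribution, optionally mixed with ordinary cross-entropy on the true label. This is a generic, single-example sketch in plain Python; the `temperature` and `alpha` defaults are illustrative assumptions rather than values from RL-Pruner, and a PyTorch version would operate on batched logit tensors instead.

```python
import math
from typing import List, Optional


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max logit avoids overflow."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: List[float], teacher_logits: List[float],
            label: Optional[int] = None, temperature: float = 4.0,
            alpha: float = 0.9) -> float:
    """Response-based knowledge distillation loss for one example.

    Soft term: KL(teacher || student) between temperature-softened outputs,
    multiplied by T^2 so its gradient scale stays comparable across temperatures.
    Hard term (only when `label` is given): cross-entropy against the true class.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(pt * math.log(pt / ps)
               for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft *= temperature ** 2
    if label is None:
        return soft
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1.0 - alpha) * hard


# The pruned student should move toward the uncompressed teacher's outputs.
teacher = [2.0, 0.5, -1.0]
far_student = [-1.0, 0.5, 2.0]
close_student = [1.8, 0.6, -0.9]
```

A student whose logits already resemble the teacher's incurs a smaller loss, which is what drives accuracy recovery after pruning.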

Professional Experience

Tencent, Applied Research Intern

- Implemented a full-stack generative AI pipeline for 3D content, comprising an Unreal Engine C++ plugin, a Unity Editor plugin, and a Python backend service for automated interior layout generation within a room’s bounding volume

- Developed AI agents that use prompt engineering and Retrieval-Augmented Generation to let LLMs generate 3D layouts by selecting appropriate items from an existing furniture database and calling custom placement functions

- Fine-tuned Qwen3-1.7B via supervised fine-tuning (SFT) and reinforcement learning with a rule-based reward to improve its function-calling ability, replacing the Claude-3.7 API in the Python backend and accelerating scene generation by ~9x (4.6s to 0.5s per request)
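
A rule-based reward for function calling could take many forms; the sketch below shows one plausible shape for scoring completions during RL. The JSON call format, the `place_item` function, and the 0 / 0.5 / 1 scoring scheme are all hypothetical and are not taken from the Tencent pipeline.

```python
import json
from typing import Any, Dict


def function_call_reward(completion: str, expected: Dict[str, Any]) -> float:
    """Score a model completion against a reference function call.

    Hypothetical rule-based scheme:
      0.0  not valid JSON, missing keys, or wrong function name
      0.5  correct function name but different arguments
      1.0  correct function name and identical arguments
    """
    try:
        call = json.loads(completion)
    except json.JSONDecodeError:
        return 0.0
    if not isinstance(call, dict) or "name" not in call or "arguments" not in call:
        return 0.0
    if call["name"] != expected["name"]:
        return 0.0
    # Dict comparison ignores key order, so argument order does not matter.
    return 1.0 if call["arguments"] == expected["arguments"] else 0.5


# Hypothetical reference call for placing a furniture item in a room.
reference = {"name": "place_item",
             "arguments": {"item_id": "sofa_01", "x": 2.0, "y": 0.0, "rotation": 90}}
completion = ('{"name": "place_item", "arguments": '
              '{"rotation": 90, "item_id": "sofa_01", "x": 2.0, "y": 0.0}}')
reward = function_call_reward(completion, reference)
```

Exact argument equality is deliberately strict here; a practical reward would likely tolerate small numeric differences in coordinates or give partial credit per argument.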

Teaching Experience

Teaching Assistant, ECE 374: Intro to Algorithms & Models of Computation (ZJU), Instructor: Pavel Loskot

- Hosted a weekly 2-hour lab session for 20 students to review and reinforce class content

Course Assistant, CS 415: Game Development (UIUC), Instructor: Eric Shaffer

- Held office hours and mentored two project teams of 4 students each through their final projects

Projects

ML4Investment, Independent, Source Code

- Designed and implemented a machine learning framework for predicting U.S. stock price movements that uses LightGBM to forecast next-day price changes, offering a cost-effective alternative to LLM-based solutions

- Built an end-to-end ML pipeline with a multi-stage optimization strategy that systematically refines data sampling, selects features, and tunes hyperparameters using multi-objective Optuna optimization and time-series cross-validation

- Engineered a backtesting system that simulates investment strategies and evaluates them by comparing composite returns against optimal benchmarks, demonstrating an actual gain of 138% over an 84-trading-day period
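
Two pieces of the ML4Investment pipeline lend themselves to a short sketch: chronological (expanding-window) cross-validation splits, which keep every validation window strictly after its training data, and compounding daily returns over a backtest period. Both functions are illustrative assumptions about how such a pipeline might work. scikit-learn's `TimeSeriesSplit` provides a ready-made version of the first, and reading "composite returns" as compounded daily returns is an interpretation, not something the project description states.

```python
from typing import Iterator, Sequence, Tuple


def expanding_window_splits(n_samples: int, n_splits: int,
                            test_size: int) -> Iterator[Tuple[range, range]]:
    """Yield chronological (train, test) index ranges.

    Each fold trains on every sample before its test window, so validation
    data always lies in the future relative to training data.
    """
    first_test_start = n_samples - n_splits * test_size
    if first_test_start <= 0:
        raise ValueError("not enough samples for the requested folds")
    for k in range(n_splits):
        test_start = first_test_start + k * test_size
        yield range(0, test_start), range(test_start, test_start + test_size)


def compounded_return(daily_returns: Sequence[float]) -> float:
    """Total fractional return from compounding per-day returns (0.01 means +1%)."""
    growth = 1.0
    for r in daily_returns:
        growth *= 1.0 + r
    return growth - 1.0


for train, test in expanding_window_splits(10, n_splits=3, test_size=2):
    print(f"train {train.start}-{train.stop - 1}, test {test.start}-{test.stop - 1}")
# train 0-3, test 4-5
# train 0-5, test 6-7
# train 0-7, test 8-9
```

Shuffled k-fold cross-validation would leak future prices into training folds, which is why the splits above only ever grow forward in time.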

ECE 391 POSIX-compliant Unix-like Operating System, UIUC, Source Code

- Implemented a POSIX-compliant Unix-like operating system featuring a terminal driver, a real-time clock driver, and system calls, along with advanced capabilities such as signal handling and dynamic memory allocation

CS 415 Game Development: The Final Boss, UIUC, Source Code

- Implemented an advanced action system for the main character featuring dynamic combo mechanics and environmental interactions, plus a context-aware NPC dialogue system that evolves based on game progression and player choices

STATS 202 URL Relevance Prediction, Stanford, Source Code

- Applied feature engineering, including data preprocessing, outlier removal, and feature extraction, then used several classification algorithms, including boosting methods, to optimize URL relevance prediction

Research Statement

My research interests center on efficient AI, particularly neural network compression and distillation. My work aims to compress CNNs and LLMs while preserving the knowledge and representations they have acquired. The goal is to reduce the number of parameters and accelerate inference without compromising performance, so that these models can be deployed on local devices such as personal computers and mobile devices, and integrated into edge scenarios such as vehicles, software, and video games. Running models locally helps protect user privacy, reduces latency, and allows neural networks to be optimized for specific applications.

Throughout my research, I have found that compressing LLMs presents unique challenges. Despite their large number of parameters and apparent redundancy, LLMs are often required to retain a vast breadth of knowledge, so aggressive compression risks losing part of it. Given this, I believe compressed LLMs are best suited to scenarios where advanced LLMs, already trained on extensive knowledge, are distilled into smaller models tailored to specific domains and deployed on the corresponding hardware. For instance, a large model could be compressed by distilling it on a dataset focused primarily on cooking and kitchen knowledge, making it well suited for deployment on specialized hardware in a kitchen environment.

From a broader perspective, I see the evolution of large language models as reminiscent of the early days of computing: initially, computers grew larger, eventually occupying entire rooms, until technological advancements allowed them to be compressed into powerful laptops. I hope that by compressing large language models, we can discover more efficient architectures and more effective representations of knowledge, enabling their deployment in practical and efficient applications that benefit society.

Miscellaneous

Since middle school, I have been fascinated by video games. Many titles, such as Red Dead Redemption 2 and Detroit: Become Human, not only entertained me but also allowed me to briefly experience other people’s lives and explore science fiction concepts. These experiences sparked my interest in science and made me realize that games can serve as a powerful medium for knowledge transfer. We spend a significant amount of time learning the knowledge accumulated by human civilization. Could there be a way to accelerate this process and reduce the repetition across generations?

In my spare time, I primarily develop independent games. Since April 2023, I have been working on my own game, and you can check out the early development version here. The project is funded entirely by the scholarships I received during my freshman and sophomore years, approximately $10,000 in total. If you are interested in my work, feel free to reach out to me.